Hello

I have two Ollama installs: the first on my Windows PC [reached at http://localhost:11434] and the other on a Linux server [reached at http://ipserver:11434].

→ When I configure the AI plugin in OnlyOffice Desktop, I can use both installs with no problem: I choose Ollama, change the URL, and pick the model from the list.

→ When I try to set up the AI plugin on OnlyOffice Document Server [Docker instance] (running on a dedicated server and used with Alfresco), I can configure OpenAI with a valid key, but I can’t configure an Ollama instance.

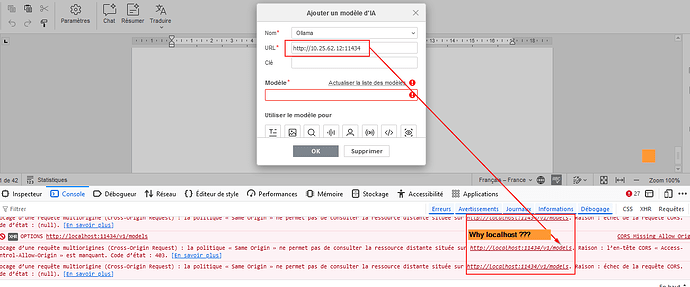

If I enter http://localhost:11434, I see CORS errors in the browser console.

If I enter http://ipserver:11434, I see the same CORS errors in the browser console. [The errors only mention the localhost URL, even though I set http://ipserver:11434]

Can I configure CORS to bypass these errors, or is it a bug?

[OnlyOffice Desktop works fine, and the latest version is installed for both Desktop and the Docker OnlyOffice Server]

Pfffff …

FAQ : Please note that Ollama is compatible only with the desktop version of the suite so you need to download ONLYOFFICE Desktop Editors for your OS to use this integration. For more information, take a look at this detailed guide in our blog.

I understand now.

Do you plan to support Ollama on Document Server?

Hello @NetCHP

Technically, you can use Ollama in the AI plugin with Document Server too, but before running Ollama on your server you need to pass an additional parameter to avoid CORS issues:

    export OLLAMA_ORIGINS="http://*,https://*"
    ollama serve

I hope this helps.
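One way to check whether the setting took effect is to send a request that carries an `Origin` header and look at the `Access-Control-Allow-Origin` header in the reply. Below is a minimal Node.js sketch, assuming Ollama's `/api/tags` endpoint is reachable over plain HTTP. The helper names `corsAllows` and `checkOllamaCors` and the example addresses are made up for illustration.

```javascript
"use strict";

// Returns true when the response headers would let a page served from
// `origin` read the response (the header is "*" or echoes the origin).
function corsAllows(headers, origin) {
  const allowed = headers["access-control-allow-origin"];
  return allowed === "*" || allowed === origin;
}

// Sends GET <baseUrl>/api/tags with an Origin header, roughly as a browser
// would, and resolves with the status code and the CORS verdict.
async function checkOllamaCors(baseUrl, origin) {
  const http = await import("node:http");
  return new Promise((resolve, reject) => {
    const url = new URL("/api/tags", baseUrl);
    const req = http.get(url, { headers: { Origin: origin } }, (res) => {
      res.resume(); // discard the body, only the headers matter here
      resolve({ status: res.statusCode, allowed: corsAllows(res.headers, origin) });
    });
    req.on("error", reject);
  });
}

// Example with hypothetical addresses (Ollama server, then Document Server origin):
// checkOllamaCors("http://ipserver:11434", "http://docserver.example").then(console.log);
```

If `allowed` comes back `false`, the browser will most likely block the plugin's requests, whatever is configured on the Document Server side.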

It’s the same thing.

For me, the problem is not with Ollama but rather with OnlyOffice Doc Server.

Here is a screenshot from the browser on my PC, connected to an OnlyOffice Docs Server instance:

Apart from the “CORS” issue, http://10.25.62.12, the address of the Ollama server, is never used. Only localhost appears. It’s as if localhost were hardcoded in OnlyOffice.

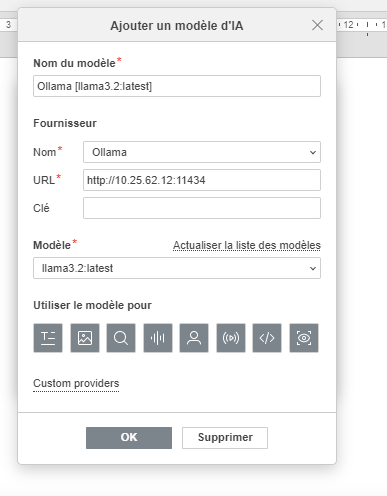

Here is a screenshot from OnlyOffice Desktop on my PC, connected to the same Ollama server:

It works! (and I was able to use AI through OnlyOffice Desktop to summarize a text)

My conclusion:

- “CORS” problem: where do I put the configuration in OnlyOffice Document Server to bypass the CORS problem?

- Ollama AI config: it seems that the address http://localhost:11434 is hardcoded; it’s probably a bug. How do I force another IP address?

The CORS headers are returned by the resource being called, which in this case is Ollama. I’ve already mentioned how you should run it to avoid CORS errors.

You can try creating your own provider and defining the IP address there, for instance like this:
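To illustrate the point, here is a rough sketch of the decision the called server makes when a browser request arrives: it compares the `Origin` header with its list of allowed patterns and only then adds the CORS header. This is not Ollama's actual code. `originMatches` and `corsHeadersFor` are hypothetical names, the origin is a made-up example, and the wildcard handling is a simplification.

```javascript
"use strict";

// Checks an origin against a pattern such as "http://*",
// where "*" stands for any run of characters.
function originMatches(pattern, origin) {
  // Escape regex metacharacters except "*", then expand "*" to ".*".
  const escaped = pattern.replace(/[.+?^${}()|[\]\\]/g, "\\$&");
  const regex = new RegExp("^" + escaped.replace(/\*/g, ".*") + "$");
  return regex.test(origin);
}

// Returns the headers a server would add for this origin,
// or an empty object when the origin is not allowed.
function corsHeadersFor(origin, allowedPatterns) {
  const ok = allowedPatterns.some((p) => originMatches(p, origin));
  return ok ? { "Access-Control-Allow-Origin": origin } : {};
}

// Same value as the OLLAMA_ORIGINS setting in this thread, split on commas.
const patterns = "http://*,https://*".split(",");
console.log(corsHeadersFor("http://docserver.example", patterns));
```

The browser only sees the outcome. If the header is missing from the response, it reports a CORS error, which is why the fix has to be applied where Ollama runs.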

    "use strict";

    class Provider extends AI.Provider {
        constructor() {
            super("Ollama", "http://<address>:11434", "", "v1");
        }
    }

How to use it:

1. Copy the code above;
2. Create a Test_ollama.js file (you can do it with VSCode, for instance);
3. Paste the code and put your IP address in place of <address> in the URL;
4. Save the file and put it in any accessible directory;
5. Open the editor and launch the AI plugin;
6. Go to the Add AI Model window;
7. Click Custom Providers;
8. In the Custom Providers window, press + and select your Test_ollama.js file from step 4;
9. Back in Add AI Model, select the model named Test_ollama in the drop-down;
10. (optional) You may need to refresh the list of models several times before it is regenerated.

This is not guaranteed to work, as CORS must be handled on the Ollama side first, but you can try.
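The first four steps can also be scripted if you prefer. Here is a small Node.js sketch that writes the provider file with the address filled in. `buildProviderSource` and `writeProviderFile` are hypothetical helpers, and the template is the same provider code as above.

```javascript
"use strict";

// Builds the content of Test_ollama.js for a given Ollama host (IP or name).
function buildProviderSource(address) {
  return [
    '"use strict";',
    "",
    "class Provider extends AI.Provider {",
    "  constructor() {",
    `    super("Ollama", "http://${address}:11434", "", "v1");`,
    "  }",
    "}",
    "",
  ].join("\n");
}

// Writes the file into the current directory (or to another file name if given).
async function writeProviderFile(address, fileName = "Test_ollama.js") {
  const fs = await import("node:fs/promises");
  await fs.writeFile(fileName, buildProviderSource(address), "utf8");
}

// Example: writeProviderFile("10.25.62.12");
```

The generated file is then selected in the Custom Providers window as described in the steps.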