Hi mate, this is the result of the very last command (the previous ones did not give any response):

```
~$ curl -v http://x.x.x.x:8000/healthcheck
*   Trying x.x.x.x:8000...
* connect to x.x.x.x port 8000 from x.x.x.x port 45300 failed: Connection refused
* Failed to connect to x.x.x.x port 8000 after 0 ms: Couldn't connect to server
* Closing connection
curl: (7) Failed to connect to x.x.x.x port 8000 after 0 ms: Couldn't connect to server
```

I suppose the last one was run from your client against your server hosting NC and OO.

It should give you the same result as running it on the server itself, where it really should work.

So if you didn’t get any response, not even on the host itself, the Document Server is either not running or listening on a different port.
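The way the connection fails already tells you a lot. Here is a minimal Python sketch (standard library only) of the same probe as the curl call; the function name `probe_healthcheck` is made up for illustration. “Connection refused” means the host answered but nothing listens on that port, while a timeout more often points to a firewall or the wrong host.

```python
import socket
import urllib.error
import urllib.request

# Bypass any http_proxy/https_proxy environment variables: a proxy would
# answer instead of the Document Server and hide the real result.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def probe_healthcheck(host: str, port: int = 8000, timeout: float = 3.0) -> str:
    """Request http://host:port/healthcheck and classify the outcome."""
    url = f"http://{host}:{port}/healthcheck"
    try:
        with _OPENER.open(url, timeout=timeout) as resp:
            return "ok: " + resp.read().decode("utf-8", "replace").strip()
    except urllib.error.HTTPError as exc:
        # Something IS listening and answered, just not with a 2xx status.
        return f"http {exc.code}"
    except urllib.error.URLError as exc:
        if isinstance(exc.reason, ConnectionRefusedError):
            # Host reachable, but no process listens on this port.
            return "refused"
        if isinstance(exc.reason, socket.timeout):
            # Packets dropped: firewall, wrong host, or host unreachable.
            return "timeout"
        return f"error: {exc.reason}"
    except socket.timeout:
        # Connected, but no answer arrived before the timeout.
        return "timeout"
```

Run it once from your client and once on the VM itself, e.g. `probe_healthcheck("x.x.x.x")`. A running Document Server should give `ok: true`; `refused` on the VM itself means the docservice is not listening on 8000.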

Go and check the docservice log in this file:

/var/log/onlyoffice/documentserver/docservice/out.log

It should tell you which port it uses and whether it is running. Mine, for example, says this:

```
[2024-11-13T08:53:35.469] ... - Express server starting...
[2024-11-13T08:53:35.475] ... - notifyLicenseExpiration(): expiration date is not defined
[2024-11-13T08:53:35.475] ... - notifyLicenseExpiration(): expiration date is not defined
[2024-11-13T08:53:35.705] ... - Express server listening on port 8000 in production-linux mode. Version: 8.2.1. Build: 38
```

I used “...” to cut out some unimportant details.
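If the log is long, the port can be pulled out of the “listening” line automatically. This is a small Python sketch that assumes the line keeps the wording shown above; `listening_port` is a hypothetical helper, not part of ONLYOFFICE.

```python
import re
from typing import Optional, Tuple

# Matches e.g. "Express server listening on port 8000 in production-linux mode."
LISTEN_RE = re.compile(r"Express server listening on port (\d+) in ([\w-]+) mode")


def listening_port(log_text: str) -> Optional[Tuple[int, str]]:
    """Return (port, mode) from the most recent 'listening' line, or None."""
    matches = LISTEN_RE.findall(log_text)
    if not matches:
        return None  # the docservice never reported that it started listening
    port, mode = matches[-1]  # the last start is the one that counts
    return int(port), mode
```

Feed it the contents of `/var/log/onlyoffice/documentserver/docservice/out.log`. `None` means the server never got as far as listening, which would match the “Connection refused” above.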

Something doesn’t add up here. Your server is obviously running (the welcome page is reachable from outside), but if you can’t connect to the default port 8000, you may be using the wrong IP (wrong host), or the host is encapsulated in some environment such as a VM.

Are you 100% sure that you are running this directly on the host and that the IP you are using is correct?

Let me briefly explain my OO+NC architecture.

Both are installed on one Ubuntu server with the very same IP, following the tutorial I mentioned in my very first post.

Ubuntu runs in a virtual machine on TrueNAS, which has a different address assigned by DHCP to its physical network card.

The VM is connected to the network through a virtual network interface named br0, which was created in the TrueNAS environment during installation.

I use pretty much the same setup over br0 for two other VMs. Both are accessible from the Internet through Nginx Proxy Manager (NPM), which runs on other hardware I have and terminates HTTPS. Between NPM and the VMs on the internal network there is only plain HTTP traffic.

Could the problem be hidden somewhere there?

The best solution for me would be to get rid of nginx on OO and NC, but I haven’t found a workable scheme yet.

Anyway, the log you mentioned contains these messages, repeated every hour:

```
[2024-11-13T18:54:18.496] [WARN] [localhost] [docId] [userId] nodeJS - sqlQuery error sqlCommand: SELECT * FROM task_result WHERE last_open_date <= : error: relation "task_result" does not exist
    at /snapshot/server/DocService/node_modules/pg-pool/index.js:45:11
    at runMicrotasks ()
    at processTicksAndRejections (node:internal/process/task_queues:96:5)
[2024-11-13T18:54:18.497] [ERROR] [localhost] [docId] [userId] nodeJS - checkFileExpire error: error: relation "task_result" does not exist
    at /snapshot/server/DocService/node_modules/pg-pool/index.js:45:11
    at runMicrotasks ()
    at processTicksAndRejections (node:internal/process/task_queues:96:5)
```

Is it useful?

To be clear: for the other proxy I mentioned, I use port forwarding on my router with different ports, so 80 and 443 are forwarded to the internal address of the Ubuntu machine with OO+NC.

OK, that explains everything.

You stated that you wanted to install everything on the host, but that host is still totally virtual. The instructions from the wiki you mentioned are not meant for that. That is the problem.

Let me think whether I can wrap my head around how everything has to be addressed so the services can be reached inside the VM.

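You already have SQL query errors (relation “task_result” does not exist means that table is missing from the Postgres database the docservice uses), so your OO installation seems broken.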

Regarding your Ubuntu VM on TrueNAS: you should bridge the VM’s network with your TrueNAS host, so that the VM gets a real LAN IP in your local network. That way you can really work with IP addresses; otherwise you need port forwarding, which is a more complex approach.

I believe you are using addresses that aren’t resolved in your local LAN. Once you bridge your VM to your LAN, your Ubuntu VM is exposed as a regular host on your network, and in my opinion the instructions in the wiki can then be applied.

One last idea. According to my understanding of OO and NC this actually should work:

Alternatively, instead of “127.0.0.1” you can use your Ubuntu VM’s hostname, as it should resolve to 127.0.0.1 anyway. Check the “/etc/hosts” file on your Ubuntu VM. For example, this is what the hosts file on my notebook looks like:

```
127.0.0.1 localhost
127.0.0.1 bermuda-NB
```

My notebook’s hostname is bermuda-NB, so a request to http://bermuda-NB resolves to 127.0.0.1 and goes to port 80.
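To confirm that a name really ends up on loopback, you can ask the system resolver directly. On a default Ubuntu install it consults `/etc/hosts` before DNS. This is a minimal Python sketch; `resolves_to_loopback` is a hypothetical helper name.

```python
import ipaddress
import socket


def resolves_to_loopback(name: str) -> bool:
    """True if every address the system resolver returns for `name` is loopback."""
    try:
        infos = socket.getaddrinfo(name, None)
    except socket.gaierror:
        return False  # the name does not resolve at all
    # info[4] is the sockaddr; its first element is the address string.
    addrs = {info[4][0].split("%")[0] for info in infos}  # drop IPv6 scope ids
    return bool(addrs) and all(ipaddress.ip_address(a).is_loopback for a in addrs)
```

On my notebook `resolves_to_loopback("bermuda-NB")` should be `True`. Run the same check on the VM with its own hostname.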

Hmm, to be honest, I’m not sure your suggestions are 100% valid. The VM has been correctly bridged the whole time, and for quite a long time I used the connection between OO and NC without any advanced server settings in the connector app’s menu. Therefore I suppose the problem does not lie there.

Anyway, tomorrow I’ll try a clean, standard installation of Ubuntu and OO+NC on separate hardware to prove to myself that this combination can work at all.

If not, I’ll throw in the towel and go back to the old, stable solution with two separate VMs.

Thanks

Hello.

Since I can’t really see which hosts/IPs you are using, I have to make sure we are on the same page about your setup, which we really weren’t. Only yesterday I discovered that you were hosting your OO and NC services on a VM inside a TrueNAS installation.

But the tests I suggested did not produce the outcome they should have. So either you have some issues with your network setup, or you are somehow misinterpreting the IPs you are using. You can’t reach your OO service from your LAN, nor from the host it’s running on. This means the Document Server is not running … or your network setup is not what you believe it to be.

But there is the fact that your OO is reachable from outside via your DynDNS redirection, which leads me to believe your network setup is broken. If you say that x.x.x.x is your OO service, but you can’t reach it with curl to retrieve the healthcheck, you are clearly banging on the wrong door here. So check your network configuration: you must know your setup and how to make a curl call that reaches your service, either the healthcheck or the welcome page.

This is how I solve issues: I probe the basics to see what works and what doesn’t, and of course I check the logs to see what happens inside the service. That way I always find what I have missed or set up wrongly.

Don’t forget NC: it has to be configured to work with OO too. Trusted domains and encryption have to be set up correctly.

You don’t have to share your internal IPs; you can substitute them for posting here. If your host is set up as 192.168.20.100, you can replace that with 192.168.1.10 and share the information that way. Whenever someone says you need to put 192.168.1.10 in some config, you substitute your host’s real IP back in, which only you know.
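That substitution is easy to automate before pasting logs or configs. This is a minimal Python sketch; the helper names and the idea of keeping loopback addresses are my own choices, and the mapping simply reuses the example addresses from the paragraph.

```python
import re
from typing import Dict

# Dotted quads; not validated, so a four-part version string would match too.
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def mask_ips(text: str, mapping: Dict[str, str]) -> str:
    """Replace real IPs with stand-ins before posting; unknown ones become x.x.x.x."""
    def repl(m):
        ip = m.group(0)
        if ip.startswith("127."):
            return ip  # loopback addresses reveal nothing, keep them
        return mapping.get(ip, "x.x.x.x")
    return IPV4_RE.sub(repl, text)


def unmask_ips(text: str, mapping: Dict[str, str]) -> str:
    """Turn stand-ins from a forum answer back into the real IPs."""
    reverse = {fake: real for real, fake in mapping.items()}
    return IPV4_RE.sub(lambda m: reverse.get(m.group(0), m.group(0)), text)
```

With `{"192.168.20.100": "192.168.1.10"}`, `mask_ips` turns the real address into the stand-in. `unmask_ips` does the reverse for any config line someone suggests.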

If you don’t mind, I will join this thread too.

I agree with @bermuda that most likely the situation is related to incorrect deployment or network rules.

Dear @tomas_427, we always recommend deploying Document Server on a separate, clean server to avoid dependency and port conflicts.

The guide mentioned in your first post hasn’t been tested, so we cannot guarantee that it works.

As for the situation in general, the docservice log shows a database error, as @bermuda has already mentioned. Something went wrong with Postgres, and that affects the situation as well. You can reproduce the issue once more and collect the out.log files from the docservice and converter folders to check whether there are any new error entries.
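To pick out only the new problems after reproducing the issue, the entries can be filtered by level and timestamp. This is a Python sketch that assumes the entry format shown in the logs in this thread. It also assumes the converter log sits next to the docservice one, at `/var/log/onlyoffice/documentserver/converter/out.log`. `new_problems` is a hypothetical helper.

```python
import re
from datetime import datetime
from typing import List

# Start of an entry: "[2024-11-13T18:54:18.497] [ERROR] ..."
ENTRY_RE = re.compile(r"^\[(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d{3})\] \[(\w+)\]")


def new_problems(log_text: str, since: datetime, levels=("ERROR", "WARN")) -> List[str]:
    """Return entries at the given levels logged at or after `since`.

    Stack-trace lines ("at ...") are attached to the entry they belong to.
    """
    entries = []  # (timestamp, level, [lines])
    for line in log_text.splitlines():
        m = ENTRY_RE.match(line)
        if m:
            ts = datetime.strptime(m.group(1), "%Y-%m-%dT%H:%M:%S.%f")
            entries.append((ts, m.group(2), [line]))
        elif entries and line.strip():
            entries[-1][2].append(line)  # continuation of the previous entry
    return ["\n".join(lines) for ts, level, lines in entries
            if ts >= since and level in levels]
```

Note the time just before you reproduce the issue, then pass that time and each out.log file’s contents to `new_problems`.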

Is it possible to deploy Document Server from scratch on a separate server?

One more thing: which version of the connector app are you using?

The difficult part is that @tomas_427, understandably for security reasons, can’t share his configuration in full. But I have thought about his approach, and besides the database errors, he needs to expose the two services to each other through the nginx proxy configuration.

That is why my first idea was to put an upstream directive into his main nginx configuration.

But it is more convenient to do it like this (this only applies to nginx configurations):

```nginx
# file location /etc/nginx/conf.d/ds.conf
# definition of the document-server host for internal services
server {
    listen 127.0.0.1:80;
    listen [::1]:80;
    server_name onlyoffice;
    server_tokens off;
    include /etc/nginx/includes/ds-*.conf;
}
```

And the nextcloud host/service should have this added to its nginx configuration, if it is not already defined by default (I can’t say for sure at the moment):

```nginx
# file location /etc/nginx/conf.d/nextcloud.conf (default nginx config for nextcloud)
# definition of the nextcloud-server host for internal services
server {
    listen 127.0.0.1:80;
    listen [::1]:80;
    server_name nextcloud;

    # ... other stuff already configured ...
}
```
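Both blocks listen on 127.0.0.1:80, so nginx chooses between them by comparing the request’s Host header with `server_name`. You can test that selection without touching `/etc/hosts` by sending the header yourself. This is a minimal Python sketch; `get_via_vhost` is a hypothetical helper.

```python
import http.client
from typing import Tuple


def get_via_vhost(host_header: str, path: str = "/healthcheck",
                  addr: str = "127.0.0.1", port: int = 80,
                  timeout: float = 3.0) -> Tuple[int, str]:
    """Send GET `path` to addr:port with an explicit Host header.

    nginx compares the Host header with server_name to choose between
    server blocks that listen on the same address and port.
    """
    conn = http.client.HTTPConnection(addr, port, timeout=timeout)
    try:
        # Passing "Host" ourselves stops http.client from sending "127.0.0.1".
        conn.request("GET", path, headers={"Host": host_header})
        resp = conn.getresponse()
        return resp.status, resp.read().decode("utf-8", "replace")
    finally:
        conn.close()
```

On the VM, `get_via_vhost("onlyoffice")` should return `(200, "true")` if the included `ds-*.conf` files route `/healthcheck` to the docservice. Any other result means the request is not reaching that block.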

Additionally, the hosts file should be updated with the internal names too:

```
# file location /etc/hosts
# simply add virtual host names to the existing line for 127.0.0.1
127.0.0.1 localhost onlyoffice nextcloud
```
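A typo in the hosts line is easy to miss, so here is a small Python sketch that checks the aliases are present. It assumes the usual hosts-file layout (address first, then names, `#` for comments). `parse_hosts` and `missing_aliases` are hypothetical helpers.

```python
from typing import Dict, Iterable, List


def parse_hosts(text: str) -> Dict[str, str]:
    """Map each hostname in hosts-file text to the first address listing it."""
    table: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        addr, *names = line.split()
        for name in names:
            table.setdefault(name.lower(), addr)  # earlier lines take precedence
    return table


def missing_aliases(text: str, names: Iterable[str], addr: str = "127.0.0.1") -> List[str]:
    """Names that are not mapped to `addr` in the given hosts-file text."""
    table = parse_hosts(text)
    return [n for n in names if table.get(n.lower()) != addr]
```

`missing_aliases(open("/etc/hosts").read(), ["onlyoffice", "nextcloud"])` should return an empty list once the line above is in place.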

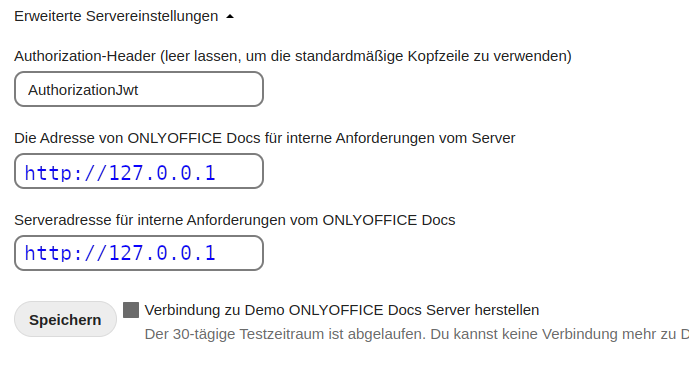

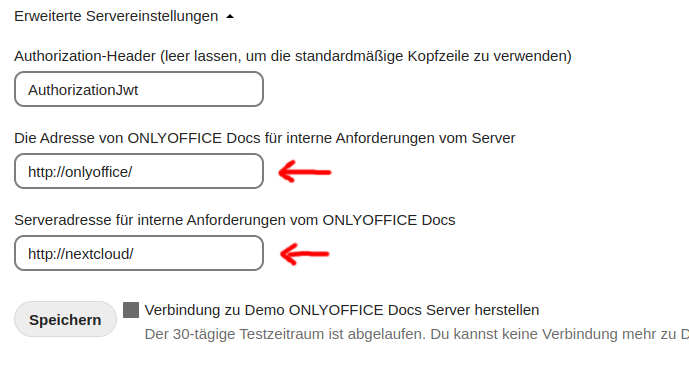

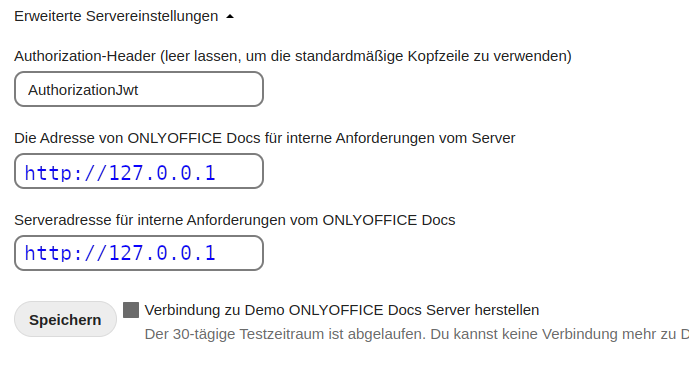

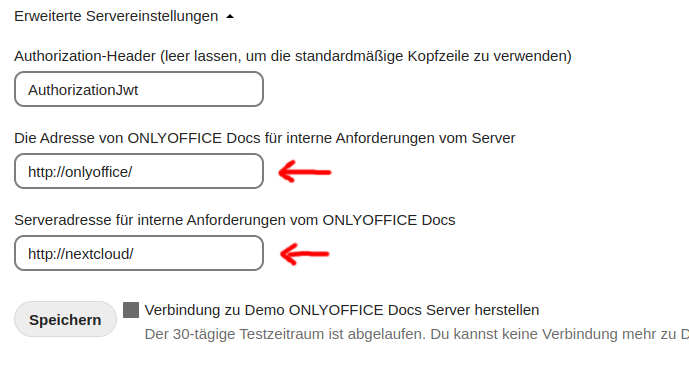

Then both services can be configured in the connector app as followed:

Like I said, this only applies to pure nginx setups; I myself don’t mix Apache and nginx anymore. It should be doable. I have the resources, and when I find some time, I will test that scenario myself.

Other than that, I would advise others to use Docker if possible. It is harder to break things in Docker, and because of its popularity there is far more information and more tutorials on setting things up that way.

Guys, today I separated both engines into different VMs with Ubuntu, configured certbot on both of them using the DNS challenge, and everything works like a charm.

Dear bermuda, I should say that neither of the two fields we fought with together for a few days, based on your ideas, needs any configuration.


Hello again.

I was able to install NC and OO on Ubuntu Server 24.04 in VirtualBox on my desktop and make it work.

However, I installed both OO and NC via snap. After that I only had to configure the nginx reverse proxy, which I installed via apt. Basically I did what I described before, with details adjusted to my environment. I didn’t use certbot, so I only configured HTTP access.

All I had to do was create two redirects: one to my virtual host nextcloud, running on port 81, and one to onlyoffice, whose default port I moved from 80 to 82. I used subfolders instead of subdomains to separate the hosts, so I had to set up rewrites for that too. I didn’t bridge the network; I only forwarded port 80 of the Ubuntu guest to port 21080 on my desktop host. Therefore I had to apply the overwrite configuration in NC to match that address. And, as I mentioned earlier, I also had to add the virtual hosts to Ubuntu’s hosts file so the names resolve back to the host itself at 127.0.0.1. The healthcheck worked; I only got stuck a little on the JWT token, as there is not much information on that in connection with snap.
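The overwrite configuration follows from the address clients actually use. This is a Python sketch that derives the Nextcloud `config.php` keys `overwritehost`, `overwriteprotocol`, `overwritewebroot`, `overwrite.cli.url` and `trusted_domains` from such an address. The helper name is hypothetical, and `http://localhost:21080/nextcloud` is an assumed example: the port forward from above plus a made-up subfolder.

```python
from typing import Dict
from urllib.parse import urlsplit


def overwrite_settings(external_url: str) -> Dict[str, object]:
    """Suggest Nextcloud config.php overwrite values for a forwarded address.

    Use when clients reach Nextcloud under a different host/port (and maybe a
    subfolder) than the one it sees locally.
    """
    parts = urlsplit(external_url)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        raise ValueError(f"not an absolute http(s) URL: {external_url!r}")
    host_port = parts.hostname + (f":{parts.port}" if parts.port else "")
    webroot = parts.path.rstrip("/")
    settings: Dict[str, object] = {
        "trusted_domains": [parts.hostname],
        "overwritehost": host_port,
        "overwriteprotocol": parts.scheme,
        "overwrite.cli.url": f"{parts.scheme}://{host_port}{webroot}",
    }
    if webroot:
        settings["overwritewebroot"] = webroot  # only needed for subfolder setups
    return settings
```

The resulting values can be applied with `occ config:system:set` (with the snap, through its `nextcloud.occ` wrapper) or edited into `config.php` directly.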

Snap was a bit of a gamble, but it turned out much better, because you get a fully working, isolated service. It’s easier to update, and there are no issues with configuring the databases and other required services.

[UPDATE]

Applying that to subdomains should be easier.

A proxy is only needed when several virtual hosts must be reachable through ports 80 and 443; otherwise port forwarding takes care of it when only OO + NC are used. I would then put OO on a non-standard port (like 8443), as it is only a backend for the docservice itself, and put NC on 80/443. The connector app would then only contain the subdomain + port where the docservice is accessible, while the internal addresses are left empty (by default the proper external addresses are used for those anyway). SSL with proper certificates should be configured via “snap … set key”. This of course also applies to other settings, such as passwords and ports, needed to secure and set up the service for the given environment.
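The rule of thumb above can be written down directly. A router port forward sends one public port to exactly one internal host:port, so only ports shared by several services need a reverse proxy in front. This is a small Python sketch; the service names and port map are hypothetical examples.

```python
from collections import defaultdict
from typing import Dict, List


def ports_needing_proxy(services: Dict[str, int]) -> Dict[int, List[str]]:
    """Return public ports shared by more than one service.

    A plain router port-forward sends a port to exactly one internal
    host:port, so only shared ports need a reverse proxy in front.
    """
    by_port: Dict[int, List[str]] = defaultdict(list)
    for name, port in services.items():
        by_port[port].append(name)
    return {port: sorted(names) for port, names in by_port.items() if len(names) > 1}
```

With NC on 443 and OO on 8443 the result is empty, so plain forwarding is enough. Putting both on 443 reports that port as one needing a proxy.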


@tomas_427 We’re glad that the situation is resolved.

@bermuda thank you for your detailed guides, I hope they will help other users in similar cases.
