Hello,

I am integrating OnlyOffice into my system to embed it within my React app. As part of the setup, I have configured an S3 bucket to be used as a document cache, following the instructions outlined here: What it means to setup S3 as cache to docs.

The Document Server should remove old documents from the cache automatically after 24 hours. However, I have noticed that my S3 bucket still contains documents that are several days old.

I would like to understand the following:

- How can I resolve this issue to ensure that old documents are properly removed after 24 hours as expected?

- Is there a specific log file or configuration where I can check for errors or debug this behavior?

I appreciate any guidance or troubleshooting steps you can provide. Thank you!

Hello @leandro

Document Server should indeed clean specific items from the cache after 24 hours. However, please note that the clean-up is triggered by a cron job that checks the PostgreSQL database for items older than 24 hours. Keep in mind that the task_result table has two columns: created_at, which records when the document was first opened, and last_open_date, which records when it was last opened. When the cron job runs, it checks the last_open_date column and, if that date is more than 24 hours old, cleans the item from the cache. This means that if a file is opened again after its first opening, last_open_date is refreshed, and during clean-up Document Server skips any item that was last opened less than 24 hours ago.

As a side note, during clean-up Document Server also checks the doc_changes table and skips any item that still has records there.
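To make the clean-up rule concrete, here is a minimal Python sketch of the selection logic as described in this reply. It is not Document Server's actual code: the `TaskResult` class, the `expired_ids` function and the in-memory `doc_changes_count` mapping are hypothetical stand-ins for the task_result and doc_changes tables, and the 24-hour lifetime is taken from the thread.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Cache lifetime mentioned in this thread.
CACHE_TTL = timedelta(hours=24)


@dataclass
class TaskResult:
    """Hypothetical in-memory mirror of the task_result columns discussed above."""
    id: str
    created_at: datetime      # first opening; not used to decide expiry
    last_open_date: datetime  # refreshed every time the document is opened


def expired_ids(rows, doc_changes_count, now):
    """Return ids that the clean-up would remove under the rules described above.

    doc_changes_count maps a document id to its number of doc_changes rows.
    """
    expired = []
    for row in rows:
        if doc_changes_count.get(row.id, 0) > 0:
            continue  # pending changes in doc_changes: item is skipped
        if now - row.last_open_date > CACHE_TTL:
            expired.append(row.id)  # not opened for more than 24 hours
    return expired


# Example: all rows share the same created_at; "b" was reopened recently
# and "c" still has doc_changes rows.
now = datetime(2024, 12, 26, 12, 0, tzinfo=timezone.utc)
first_open = datetime(2024, 12, 20, 9, 0, tzinfo=timezone.utc)
rows = [
    TaskResult("a", first_open, datetime(2024, 12, 24, 12, 0, tzinfo=timezone.utc)),
    TaskResult("b", first_open, datetime(2024, 12, 26, 1, 0, tzinfo=timezone.utc)),
    TaskResult("c", first_open, datetime(2024, 12, 22, 12, 0, tzinfo=timezone.utc)),
]
print(expired_ids(rows, {"c": 3}, now))  # ['a']
```

Because only last_open_date is compared, a document first opened a week ago can still stay in the cache if someone opened it in the last day.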

You can check the records in the database by connecting to it with `psql -U <username> -h localhost` (the username is in the local.json config), entering the password (also in local.json), and then running the `SELECT * FROM task_result;` and `SELECT * FROM doc_changes;` queries to print the contents of these tables.
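If you prefer to script this check, the sketch below reads the connection settings from local.json and builds the psql call for the two read-only queries above. It assumes the settings sit under `services.CoAuthoring.sql` with the keys `dbHost`, `dbPort`, `dbName`, `dbUser` and `dbPass`, and that local.json is at `/etc/onlyoffice/documentserver/local.json`; please verify both against your own installation. The password is passed through the standard `PGPASSWORD` environment variable instead of the interactive prompt.

```python
import json
import os
import subprocess

# Assumed location for DEB/RPM installs; adjust for your installation.
LOCAL_JSON = "/etc/onlyoffice/documentserver/local.json"

# Read-only queries from the reply above; nothing here modifies the tables.
QUERIES = ("SELECT * FROM task_result;", "SELECT * FROM doc_changes;")


def load_config(path=LOCAL_JSON):
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def psql_invocation(config, query):
    """Build psql arguments and environment for one query.

    Assumes the database settings live under services.CoAuthoring.sql.
    """
    sql = config["services"]["CoAuthoring"]["sql"]
    args = [
        "psql",
        "-h", str(sql.get("dbHost", "localhost")),
        "-p", str(sql.get("dbPort", 5432)),
        "-U", sql["dbUser"],
        "-d", sql.get("dbName", sql["dbUser"]),
        "-c", query,
    ]
    # PGPASSWORD is read by psql (libpq), so no password prompt appears.
    env = dict(os.environ, PGPASSWORD=str(sql["dbPass"]))
    return args, env


def print_tables(config):
    """Run each query through psql and print its output."""
    for query in QUERIES:
        args, env = psql_invocation(config, query)
        subprocess.run(args, env=env, check=True)
```

On the server, `print_tables(load_config())` would print both tables, provided `psql` is on the PATH.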

Please do not modify records in these tables manually, as this may cause various issues with Document Server's functionality.

I hope it helps.

Hi @Constantine, great explanation! I was able to see the records in the DB. However, I only see records from the last day (since yesterday). Older records seem to have been deleted from the DB but not from the S3 cache, where they keep accumulating. Any idea what could be happening or how to debug this?

Thanks!

Can you share the Document Server logs? Maybe we can find some information about those files. Also, if possible, please share some keys from your S3 cache that are still in the storage but no longer exist in the database. Additionally, please specify the Document Server version and its installation type.

Logs are located in the following places:

- for Windows installations: C:\Program Files\ONLYOFFICE\DocumentServer\Log;

- for DEB/RPM package installations: /var/log/onlyoffice/documentserver/;

- for Docker installations: the same directory as for packages, but inside the Document Server container.

You can download server logs here

Keys from S3 that should not be there:

RmlsZVR5cGU6NjczZGU5MDJjZDcyOTc4NGE0NzIxY2Fk

RmlsZVR5cGU6NjcyYTU3MDFkMzIxYjZjNmZlYzA2N2E0_3596

RmlsZVR5cGU6NjczZGU5MDJjZDcyOTc4NGE0NzIxY2Fk

Document Server version: 8.2.0.143

Installation type: ONLYOFFICE Amazon Machine Image available on the AWS Marketplace, ONLYOFFICE Docs Developer Edition (250 connections)

Thanks!

Please send me the AMI product details in a PM.

I've sent you a PM with the details.

Thank you.

I found some records for these entries; they seem to refer to the same file. Overall, I see that an error prevented the file from being saved back to the storage and, as a result, it is now in the forgotten directory:

[2024-12-24T20:43:25.307] [ERROR] [localhost] [RmlsZVR5cGU6NjczZGU5MDJjZDcyOTc4NGE0NzIxY2Fk] [VXNlclR5cGU6NjcwZWMzOWVjZjA5MTZiM2UwYzQzYmMz1] nodeJS - sendServerRequest error: <truncated> Error: Error response: statusCode:500; headers:{"date":"Tue, 24 Dec 2024 20:43:25 GMT","content-type":"application/json","content-length":"59","connection":"keep-alive","access-control-allow-origin":"*","server":"Werkzeug/2.0.3 Python/3.11.10"}; body:

{"error":"Failed to save document changes or clear cache"}

[2024-12-24T20:43:25.321] [WARN] [localhost] [RmlsZVR5cGU6NjczZGU5MDJjZDcyOTc4NGE0NzIxY2Fk] [VXNlclR5cGU6NjcwZWMzOWVjZjA5MTZiM2UwYzQzYmMz1] nodeJS - storeForgotten
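The entries above follow a bracketed layout (`[timestamp] [level] [host] [document key] [user id] message`), inferred from this sample only. The Python sketch below uses that assumption to scan log lines and list document keys that logged an ERROR but never a `storeForgotten` entry, which is one way to spot files that failed to save but were not moved to the forgotten directory. The function name is hypothetical, and lines that do not match the pattern (such as the JSON response body) are skipped.

```python
import re

# Layout inferred from the sample entries above (an assumption, not a spec):
# [timestamp] [LEVEL] [host] [docId] [userId] message
LOG_LINE = re.compile(
    r"^\[(?P<ts>[^\]]+)\] \[(?P<level>[A-Z]+)\] \[(?P<host>[^\]]*)\] "
    r"\[(?P<doc>[^\]]*)\] \[(?P<user>[^\]]*)\] (?P<msg>.*)$"
)


def errors_without_forgotten(lines):
    """Return document keys that logged an ERROR but never a storeForgotten entry."""
    errors = set()
    forgotten = set()
    for line in lines:
        m = LOG_LINE.match(line)
        if not m:
            continue  # continuation lines such as response bodies do not match
        doc = m.group("doc")
        if m.group("level") == "ERROR":
            errors.add(doc)
        if "storeForgotten" in m.group("msg"):
            forgotten.add(doc)
    return sorted(errors - forgotten)


# Usage: pass the lines of a log file from the log directory, e.g.
# with open(path, encoding="utf-8") as f:
#     print(errors_without_forgotten(f))
```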

Do I understand correctly that these files are located in the forgotten directory and that this is what concerns you?

In general, Document Server stores files in the forgotten directory when saving fails, so that they can be recovered.

Sorry about that, I sent you the same ID twice.

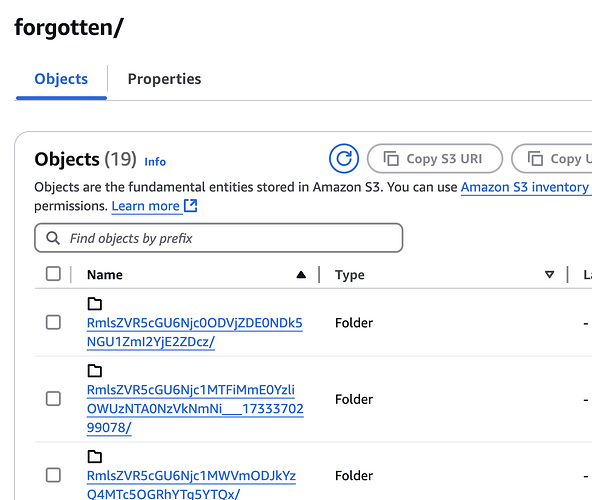

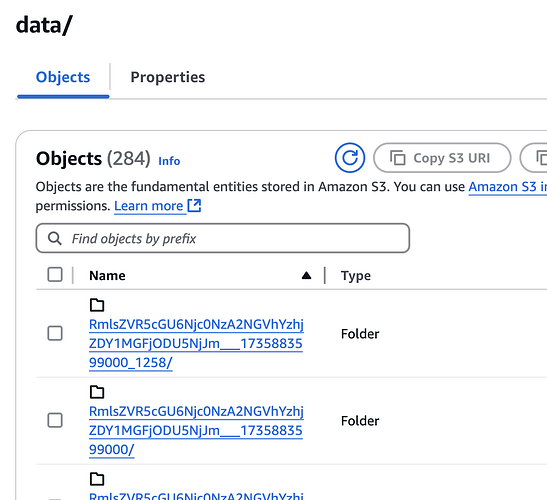

Yes, you are right, these ones are in the forgotten folder. However, my forgotten folder only has 19 objects, while the data folder has 284 objects; see the attached screenshots.

Here are other examples that are not in forgotten but are still in the data folder:

RmlsZVR5cGU6Njc0NzA2NGVhYzhjZDY1MGFjODU5NjJm

RmlsZVR5cGU6Njc0OTdhMzhmYzVkYmY5YzIwM2MwMTJm

RmlsZVR5cGU6Njc1MTFjYTdkMjRlNmU1NjYyYzZiYzMz

Thanks!

Are these files absent from the database? According to the logs, they were never stored in the forgotten directory, even though an error occurred for each of them.

So you now have approximately 284 files in the S3 cache, but how many entries do you have in task_result?
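One way to answer this is to diff the document keys found in the S3 data folder against the keys stored in task_result (assumed here to be its `id` column). The sketch below assumes each cached object key contains a `data/<document key>/...` path segment, as the folder names in the screenshots suggest; the sample keys and the `cache/files/` prefix are hypothetical. Listing the bucket uses boto3's `list_objects_v2` paginator.

```python
def list_keys(bucket, prefix=""):
    """Yield every object key under prefix (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the pure helpers below work without it

    paginator = boto3.client("s3").get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            yield obj["Key"]


def doc_ids_from_keys(keys, folder="data"):
    """Collect the path segment that follows `folder` in each key (assumed layout)."""
    ids = set()
    for key in keys:
        parts = key.split("/")
        if folder in parts:
            i = parts.index(folder)
            if i + 1 < len(parts) and parts[i + 1]:
                ids.add(parts[i + 1])
    return ids


def orphaned_cache_ids(s3_keys, task_result_ids):
    """Document keys present in the data folder but missing from task_result."""
    return sorted(doc_ids_from_keys(s3_keys) - set(task_result_ids))


# Hypothetical keys: BBB has cache objects but no task_result row,
# and CCC is only in the forgotten folder, so it is ignored.
sample_keys = [
    "cache/files/data/AAA/Editor.bin",
    "cache/files/data/AAA/media/image1.png",
    "cache/files/data/BBB/Editor.bin",
    "cache/files/forgotten/CCC/output.docx",
]
print(orphaned_cache_ids(sample_keys, ["AAA"]))  # ['BBB']
```

With real data, `s3_keys` would come from `list_keys(...)` on your bucket and `task_result_ids` from the `SELECT` query mentioned earlier in the thread.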